The Samsung S21 Ultra smartphone has a dedicated camera with a longer focal length, suitable for imaging small or distant objects. Wikipedia lists its specifications as: 10 MP, f/4.9, 240 mm (periscope telephoto), 1/3.24", 1.22 µm, dual pixel PDAF, OIS, 10x optical. Many people have tested this camera on the Moon and found an incredible amount of detail, comparable to what small telescopes show. This may be too good to be true, as pointed out in many online posts (Example 1, Example 2). These posts show that most of the image quality comes from an AI algorithm that recognizes the Moon and "optimizes" the result to show the appropriate details. However, many online tests simply photograph a blurred version of a high-resolution Moon image.

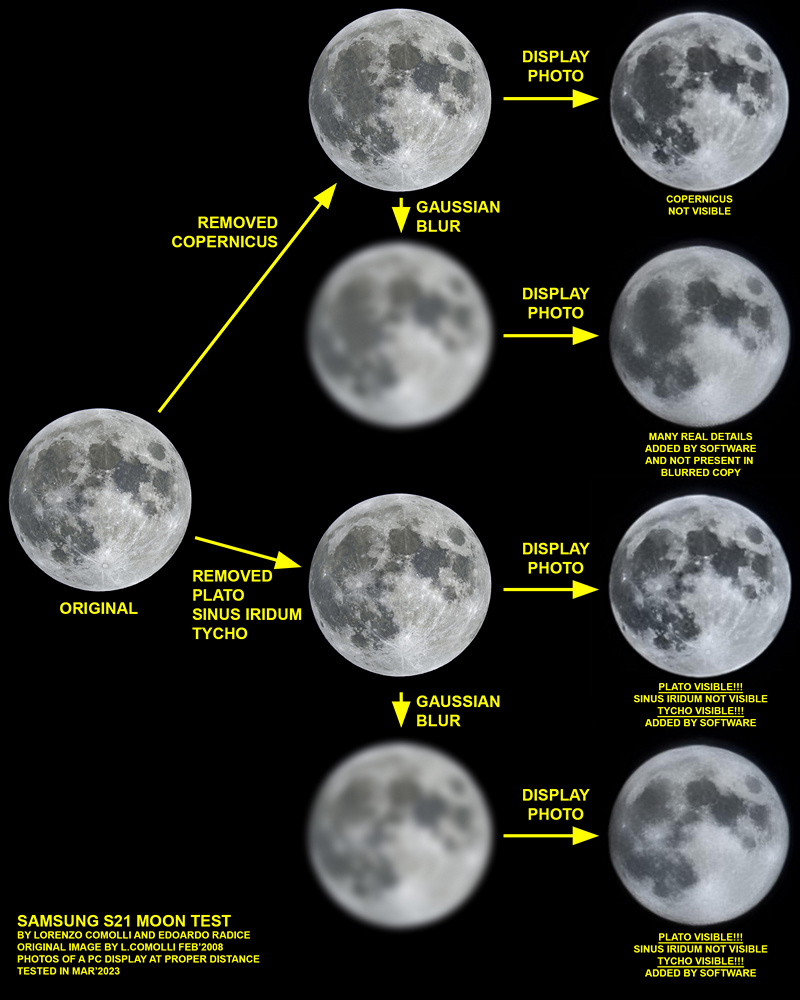

In our test we went a step further: we removed some details (craters) to see whether Samsung's AI software adds them back in the result. The short answer is: YES! Samsung's AI software adds some craters in the right place (!) even when they were completely removed from the image, and even after heavy blurring.

Clearly, Samsung's AI is adding details that should be there, even when they are not. Imagine a meteorite striking the Moon and simply erasing Plato crater: the Samsung S21 Ultra camera and its AI software would continue to show it!

To amateur astronomers like us, this strongly recalls the never-ending debate between visual observers and astrophotographers, in which the latter accuse the former of seeing impossible details and colors through their telescopes, not because they really see them, but because they remember photos from the Hubble Space Telescope or planetary probes.

It seems that the AI implemented by Samsung, which is stated to have been trained on high-resolution Moon shots, is simply REMEMBERING details seen during training and adding them to the processed image even when they are not present.

In my opinion, the Samsung S21 Ultra Moon images can clearly be considered fake.

This also raises a more philosophical question: this AI behaves more like a human remembering what should be there than like objective image-enhancement software showing what is really there. Where will this take us?

Samsung S21 Ultra Moon test.

Some craters were removed with the Clone Stamp Tool in Photoshop, starting from a high-resolution image of the Full Moon taken in February 2008. The image was then shown on a PC display and photographed from a distance D = 114 × d (where d is the diameter of the Moon on the display), so that its angular size matches that of the Moon in the sky. As an additional step, the image was heavily blurred with the "Gaussian Blur" filter in Photoshop. The Samsung camera software was set with "Optimization" turned on. Small craters were added by the S21 Ultra software even though they were not present in the photographed image!!! In this example, Plato and Tycho are clearly visible even though they were removed. This demonstrates that the AI software adds non-existent details for the sole reason that they "should" be there. Larger features such as Sinus Iridum or Copernicus were not added.
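The distance rule D = 114 × d comes from simple geometry: a disc of diameter d seen from distance D subtends an angle of 2·atan(d / 2D), which for small angles is about d/D radians, and 1/114 rad is roughly half a degree, close to the Moon's apparent size. The short Python sketch below checks this. The function names and the 20 cm disc width are hypothetical, chosen only for illustration, and the lunar diameter (~3,475 km) and mean distance (~384,400 km) are rounded values.

```python
import math


def angular_size_deg(diameter: float, distance: float) -> float:
    """Angular diameter, in degrees, of a disc of the given diameter seen from the given distance."""
    return math.degrees(2 * math.atan(diameter / (2 * distance)))


def viewing_distance(disc_diameter: float, target_deg: float) -> float:
    """Distance at which a disc of diameter disc_diameter subtends target_deg degrees."""
    return disc_diameter / (2 * math.tan(math.radians(target_deg) / 2))


# Angle implied by the rule D = 114 * d (units cancel, so d = 1 is enough)
rule_deg = angular_size_deg(1.0, 114.0)

# Mean apparent diameter of the Moon, from rounded values:
# ~3,475 km diameter seen from ~384,400 km mean distance
moon_deg = angular_size_deg(3475.0, 384400.0)

# Hypothetical example: the Moon disc is 20 cm wide on the monitor
d_cm = 20.0
print(f"D = 114 d subtends {rule_deg:.3f} deg ({rule_deg * 60:.1f} arcmin)")
print(f"Mean lunar diameter: {moon_deg:.3f} deg ({moon_deg * 60:.1f} arcmin)")
print(f"d = {d_cm:.0f} cm -> D = {114 * d_cm:.0f} cm with the 114x rule")
print(f"d = {d_cm:.0f} cm -> D = {viewing_distance(d_cm, moon_deg):.0f} cm for the mean Moon")
```

With these inputs the 114 rule corresponds to about 0.50° (roughly 30 arcmin), while the mean lunar diameter gives a ratio closer to 111. Both values fall within the range the Moon's apparent size covers over a month as its distance from Earth changes, so the two choices differ by only a few percent.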